Byte Size CI/CD - Part 2

Let's get our hands dirty

Hello again all, and welcome to Part 2 of how the CI/CD pipeline is built for this blog. In this article we are going to run through all the different parts in more detail and also write some code 😱. Trust me, if I can do it, then you can too. So without waffling on too much, let's get right in.

What you will need

- GitHub account

- AWS account and public S3 bucket

- CircleCI account (just use GitHub to sign in)

- Code editor such as VS Code

If you don’t have any of the accounts mentioned above, please have a quick Google and create these if you plan to follow along. There are plenty of articles showing you how to do this, and in the future I might write some posts about how to create them, for quicker reference.

Let's go Hugo!

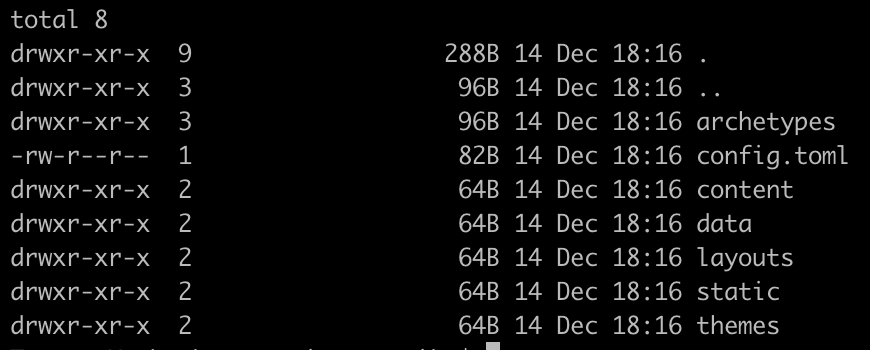

Now that we have all our accounts set up, let's download the Hugo framework and start a very simple site. Just follow the simple setup guidelines found on the Quick Start page. Once that is all set up, you should have something like the standard Hugo folder structure, with directories such as archetypes, content, layouts, static and themes, plus a config file.

If you are using a template, now is the time to follow the developer's instructions on how to set up the template and where to save the downloaded files within the site files created above.

Push to GitHub

Once we have all the Hugo and template files in the correct place locally, we need to push these up to our GitHub repo for use in our CI/CD pipeline in the next stage.

Set up CircleCI workflow

Now that we have our Hugo site files in our repo, we need to create the CircleCI workflow config file to actually build and deploy our site.

What do we want to achieve from our workflow? Well, we want to pick up changes made to our code in GitHub. Then, depending on the branch or tag, we want CircleCI to run jobs that in the end give us a website running on Hugo.

Before creating a new workflow, we need to create a context. This is a key/value store within CircleCI that we can attach to specific jobs. In our use case we will store a set of AWS credentials, AWS Region and AWS bucket name. So the keys will be:

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- AWS_REGION

- AWS_BUCKET

The values to go with this will be unique to your AWS account 😄.
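As a rough illustration of how a context reaches a job, here is a minimal sketch of a standalone config. The job name check-context and the workflow name check are made up for this example, and byte-size is the context name used in the real config later in this post; yours may differ. The idea is simply that any job listed with a context gets that context's keys as environment variables, so the step can read them without the values ever living in the repo.

```yaml
version: 2.1

jobs:
  check-context:                      # hypothetical job name, for illustration only
    docker:
      - image: circleci/python:3.7.4  # same image the deploy job uses
    steps:
      - run:
          name: Show non-secret context values
          # AWS_BUCKET and AWS_REGION come from the context, not from this file
          command: echo "Bucket ${AWS_BUCKET} in region ${AWS_REGION}"

workflows:
  check:                              # hypothetical workflow name
    jobs:
      - check-context:
          context: byte-size          # must match the context name you created
```

Only the bucket and region are echoed here on purpose; the access key and secret should never be printed in a build log.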

Below is a copy of the .circleci/config.yml that you will need in your repo if you are following a workflow like ours. In the jobs list under workflows, you will see that the deploy job has the context we spoke about earlier. This means only this job will have the AWS credentials attached to it.

```yaml
version: 2.1

orbs:
  aws-s3: circleci/[email protected]

jobs:
  build:
    docker:
      - image: cibuilds/hugo:latest
    working_directory: ~/project
    steps:
      - checkout
      - run:
          name: Build Static Site Files
          command: HUGO_ENV=production hugo -v -d public/
      - persist_to_workspace:
          root: .
          paths:
            - .
  test:
    docker:
      - image: cibuilds/hugo:latest
    steps:
      - attach_workspace:
          at: .
      - run:
          name: 'Test Site Files'
          command: htmlproofer ~/project/public --allow-hash-href --check-html --empty-alt-ignore --disable-external
  deploy:
    docker:
      - image: circleci/python:3.7.4
    steps:
      - attach_workspace:
          at: .
      - aws-s3/sync:
          from: ~/project/public
          to: 's3://${AWS_BUCKET}'
          overwrite: true

workflows:
  version: 2
  build_test_deploy:
    jobs:
      - build:
          filters:
            branches:
              only: master
      - test:
          requires:
            - build
          filters:
            branches:
              only: master
      - deploy:
          context: byte-size
          requires:
            - test
          filters:
            branches:
              only: master
```

So what is this doing? The jobs section is where the magic begins. This is a very simple workflow with 3 jobs that all rely on the master branch. If you want, you can make it more complex and use tags or different branch names. But for this workflow, when there is a commit to master, it will do the following in order:

- build: spins up a Hugo-based Docker container, then checks out the code from the master branch. It then runs the Hugo build command to create the static files in the `public` directory, and finally persists the data into a workspace to be used later.

- test: spins up a new Hugo-based Docker container, but this time attaches the workspace created during the `build` job. This means all the files in `public` still exist, and now we can run `htmlproofer` against them to make sure they are valid HTML files.

- deploy: finally, we spin up the last Docker container, based on Python 3.7.4, and again attach the workspace from the `build` job. This job is a bit more special, as it uses a CircleCI Orb called `circleci/[email protected]`. An Orb is a prebuilt, self-contained set of commands that can be reused with a couple of lines of code. In our use case, it copies the files to our S3 bucket.
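Earlier we said the workflow could use tags instead of commits to master. As a minimal sketch of that idea, and not what this blog actually uses, the workflows section could be swapped for something like the fragment below. The v-prefixed tag pattern is an assumption picked for illustration; the job names and the byte-size context are the ones from the config above, and the jobs section stays unchanged. CircleCI does not run jobs for tags unless a tag filter is set, and every job that a tag-filtered job requires needs its own tag filter too, which is why the filter is repeated on all three.

```yaml
# Sketch only: run the pipeline on version tags instead of commits to master.
# The /^v.*/ pattern (tags such as v1.0.0) is a hypothetical choice.
workflows:
  version: 2
  build_test_deploy:
    jobs:
      - build:
          filters:
            tags:
              only: /^v.*/      # run for tags starting with "v"
            branches:
              ignore: /.*/      # skip ordinary branch commits
      - test:
          requires:
            - build
          filters:
            tags:
              only: /^v.*/
            branches:
              ignore: /.*/
      - deploy:
          context: byte-size
          requires:
            - test
          filters:
            tags:
              only: /^v.*/
            branches:
              ignore: /.*/
```

With something like this in place, pushing a tag such as v1.0.0 would kick off build, test and deploy, while a plain commit to any branch would not.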